Mastering Azure Kubernetes Service (AKS): Best Practices for Enterprise Deployments

AKS Best practices

Abiola Akinbade

12/27/2024 · 3 min read

Organizations now use Kubernetes to run containerized applications at scale. Azure Kubernetes Service (AKS) is a managed platform: Azure operates the control plane, so teams can deploy and scale these applications with less operational work.

Setting up AKS for large companies brings challenges that need careful planning. This guide covers key strategies for successful AKS deployments in business settings.

Building a Strong Foundation

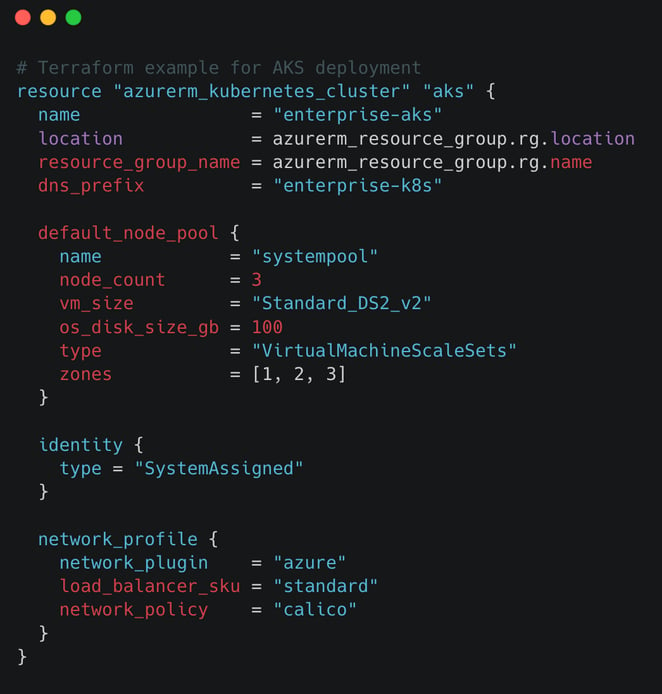

Use Infrastructure as Code

Start your AKS deployment with code-based infrastructure. Tools like Terraform or Azure Bicep help create consistent setups across all environments.

This approach lets you track changes, review code, and test before deployment. It helps prevent configuration drift and mistakes.

Microsoft's quickstart shows how to deploy a base cluster with Terraform: https://learn.microsoft.com/en-us/azure/aks/learn/quick-kubernetes-deploy-terraform
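To make "configuration drift" concrete, here is a minimal sketch of a drift check. It compares the settings declared in code with the settings found on a live cluster. The setting names and values are hypothetical, and real tools such as Terraform do this comparison for you when they compute a plan.

```python
from typing import Any


def find_drift(desired: dict[str, Any], actual: dict[str, Any], prefix: str = "") -> list[str]:
    """Return readable differences between declared and live settings."""
    drift: list[str] = []
    for key in sorted(set(desired) | set(actual)):
        path = f"{prefix}{key}"
        if key not in actual:
            drift.append(f"{path}: missing (expected {desired[key]!r})")
        elif key not in desired:
            drift.append(f"{path}: unmanaged value {actual[key]!r}")
        elif isinstance(desired[key], dict) and isinstance(actual[key], dict):
            # Recurse into nested blocks such as a node pool definition.
            drift.extend(find_drift(desired[key], actual[key], prefix=f"{path}."))
        elif desired[key] != actual[key]:
            drift.append(f"{path}: expected {desired[key]!r}, found {actual[key]!r}")
    return drift


# Hypothetical data: "desired" comes from Git, "actual" from the running cluster.
desired = {"kubernetes_version": "1.30", "node_pool": {"vm_size": "Standard_D4s_v5", "count": 3}}
actual = {"kubernetes_version": "1.30", "node_pool": {"vm_size": "Standard_D4s_v5", "count": 5}}

for line in find_drift(desired, actual):
    print(line)  # node_pool.count: expected 3, found 5
```

Here someone scaled the node pool by hand, and the check surfaces it so the change can be reverted or written back into code.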

Set Up Hub-Spoke Networks

For most companies, a hub-spoke network structure works best for AKS:

Hub network: Contains shared services like firewalls and gateways

Spoke networks: House AKS clusters, isolated from one another

Network peering: Links hub to spokes with controlled routing

This setup gives you central security controls while keeping workloads separate.

Review the hub and spoke architecture here:

https://learn.microsoft.com/en-us/azure/architecture/networking/architecture/hub-spoke
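Virtual network peering requires address spaces that do not overlap, so it helps to check the address plan before creating anything. The sketch below uses Python's standard `ipaddress` module. The network names and CIDR ranges are made up for illustration.

```python
import ipaddress
from itertools import combinations


def overlapping_networks(address_spaces: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of networks whose address ranges overlap."""
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in address_spaces.items()}
    return [
        (name_a, name_b)
        for (name_a, net_a), (name_b, net_b) in combinations(networks.items(), 2)
        if net_a.overlaps(net_b)
    ]


# Hypothetical address plan: spoke-dev sits inside spoke-prod by mistake.
plan = {
    "hub": "10.0.0.0/16",
    "spoke-prod": "10.1.0.0/16",
    "spoke-dev": "10.1.128.0/17",
}

print(overlapping_networks(plan))  # [('spoke-prod', 'spoke-dev')]
```

An empty result means the plan is safe to peer. A check like this fits well in the review step of an infrastructure pipeline.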

Use Private Clusters

Make your AKS clusters private so the API server is reachable only from your private network rather than through a public endpoint. This reduces exposure to the internet.
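As a rough sketch, a private cluster can be requested at creation time with the `--enable-private-cluster` flag of `az aks create`. The snippet only builds and prints the command so it can be reviewed first. The resource group and cluster names are placeholders, and a real deployment would need more options (networking, identity, node pools).

```python
import shlex


def private_cluster_command(resource_group: str, cluster_name: str) -> list[str]:
    """Build an `az aks create` invocation for a private cluster."""
    return [
        "az", "aks", "create",
        "--resource-group", resource_group,
        "--name", cluster_name,
        # Exposes the API server on a private address inside your network.
        "--enable-private-cluster",
    ]


# Placeholder names, not taken from any real environment.
command = private_cluster_command("rg-aks-prod", "aks-prod-01")
print(shlex.join(command))
```

In practice the same setting would usually live in your Terraform or Bicep code rather than in an ad hoc command.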

Plan for Multiple Regions

If you need high availability across regions, consider:

Setting up AKS clusters in multiple regions

Using Azure Front Door or Traffic Manager for global routing

Using geo-replicated storage for data consistency

Deploying with tools like Flux or ArgoCD across all clusters

https://learn.microsoft.com/en-us/azure/architecture/reference-architectures/containers/aks-multi-region/aks-multi-cluster

Security in Layers

Connect to Entra ID

Link AKS with Entra ID for better identity management. This gives you:

Single sign-on for cluster access

Group-based access control

Central identity management and audit logs

https://learn.microsoft.com/en-us/azure/aks/azure-ad-integration-cli
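Group-based access usually comes down to a Kubernetes RoleBinding whose subject is an Entra ID group. The sketch below builds one as a plain dictionary and prints it as JSON, which `kubectl apply -f -` accepts. It assumes Kubernetes RBAC with Entra ID integration, where the group is referenced by its object ID. The namespace and the all-zero object ID are placeholders.

```python
import json


def group_role_binding(namespace: str, group_object_id: str, cluster_role: str = "view") -> dict:
    """Bind an Entra ID group to a built-in ClusterRole inside one namespace."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{cluster_role}-entra-group", "namespace": namespace},
        "subjects": [
            {
                "kind": "Group",
                "apiGroup": "rbac.authorization.k8s.io",
                # Placeholder: the object ID of the Entra ID group.
                "name": group_object_id,
            }
        ],
        "roleRef": {
            "kind": "ClusterRole",
            "apiGroup": "rbac.authorization.k8s.io",
            "name": cluster_role,
        },
    }


binding = group_role_binding("payments", "00000000-0000-0000-0000-000000000000")
print(json.dumps(binding, indent=2))
```

Binding groups instead of individual users means access changes happen in Entra ID, not in the cluster.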

Secure the Network

Add multiple layers of network protection:

Network Security Groups to filter subnet traffic

Azure Firewall for outbound traffic control

Network Policy for pod-to-pod traffic rules

Service mesh for encryption and traffic control

https://learn.microsoft.com/en-us/azure/aks/concepts-security
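For the pod-to-pod layer, a common starting point is a default-deny policy plus explicit allow rules. The sketch below builds both as dictionaries and prints them as JSON. The namespace, labels, and port are hypothetical, and the policies only take effect if the cluster was created with a network policy engine enabled.

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """Block all incoming pod traffic in a namespace until a rule allows it."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        # An empty podSelector selects every pod in the namespace.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress"]},
    }


def allow_ingress(namespace: str, target_app: str, source_app: str, port: int) -> dict:
    """Allow TCP traffic to `target_app` pods only from `source_app` pods."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{source_app}-to-{target_app}", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": {"app": source_app}}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


# Hypothetical namespace and app labels.
policies = [default_deny_ingress("shop"), allow_ingress("shop", "backend", "frontend", 8080)]
for policy in policies:
    print(json.dumps(policy, indent=2))
```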

Protect Containers

Make your container pipeline secure:

Scan images with Azure Container Registry and Defender for Cloud

Use Pod Security Standards to limit what pods can do

Add OPA Gatekeeper for policy enforcement

Store secrets in Azure Key Vault
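Pod Security Standards are applied per namespace through labels read by the built-in Pod Security Admission controller. The sketch below builds a namespace that enforces one of the three standard levels. The namespace name is hypothetical.

```python
import json

# The three levels defined by the Kubernetes Pod Security Standards.
PSS_LEVELS = ("privileged", "baseline", "restricted")


def namespace_with_pod_security(name: str, enforce: str = "restricted", warn: str = "restricted") -> dict:
    """Build a Namespace manifest labeled for Pod Security Admission."""
    for level in (enforce, warn):
        if level not in PSS_LEVELS:
            raise ValueError(f"unknown Pod Security Standards level: {level!r}")
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {
            "name": name,
            "labels": {
                # Pods that violate this level are rejected.
                "pod-security.kubernetes.io/enforce": enforce,
                # Pods that violate this level are admitted with a warning.
                "pod-security.kubernetes.io/warn": warn,
            },
        },
    }


namespace = namespace_with_pod_security("payments", enforce="baseline", warn="restricted")
print(json.dumps(namespace, indent=2))
```

Enforcing `baseline` while warning on `restricted` is one way to tighten a namespace gradually without breaking existing workloads.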

Apply Policies

Use Azure Policy for AKS to enforce company standards: https://learn.microsoft.com/en-us/azure/aks/use-azure-policy

Running AKS Better

Plan Your Node Pools

Separate node pools by purpose:

System node pools: Reserved for system services and tainted so that application pods are not scheduled on them

Workload-specific pools: Group by:

Resource needs (memory, compute, GPU)

Compliance needs (PCI-DSS, HIPAA)

Update frequency needs

https://learn.microsoft.com/en-us/azure/aks/manage-node-pools
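Taints keep application pods off a pool unless the pod carries a matching toleration. The sketch below is a simplified model of that matching rule, not the scheduler's actual code. It uses the `CriticalAddonsOnly=true:NoSchedule` taint that AKS documents for dedicated system node pools and only considers `NoSchedule` taints.

```python
def tolerates(toleration: dict, taint: dict) -> bool:
    """Return True if one toleration matches one taint (simplified rules)."""
    # An empty effect on the toleration matches every effect.
    if toleration.get("effect") and toleration["effect"] != taint["effect"]:
        return False
    if toleration.get("operator", "Equal") == "Exists":
        # With Exists, an empty key matches every taint key.
        return not toleration.get("key") or toleration["key"] == taint["key"]
    return (
        toleration.get("key") == taint["key"]
        and toleration.get("value", "") == taint.get("value", "")
    )


def can_schedule(pod_tolerations: list[dict], node_taints: list[dict]) -> bool:
    """A pod fits only if every NoSchedule taint on the node is tolerated."""
    return all(
        any(tolerates(toleration, taint) for toleration in pod_tolerations)
        for taint in node_taints
        if taint["effect"] == "NoSchedule"
    )


system_pool_taints = [{"key": "CriticalAddonsOnly", "value": "true", "effect": "NoSchedule"}]
system_pod = [{"key": "CriticalAddonsOnly", "operator": "Exists"}]
application_pod: list[dict] = []

print(can_schedule(system_pod, system_pool_taints))       # True
print(can_schedule(application_pod, system_pool_taints))  # False
```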

Create an Upgrade Plan

Define a repeatable process for cluster upgrades:

Set a monthly update schedule

Test upgrades in test environments first

Use blue/green deployment for critical workloads

Use node surge settings to reduce downtime
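Node surge controls how many extra nodes AKS adds during an upgrade, which trades speed against temporary cost. The sketch below estimates the surge node count and the number of upgrade rounds for a pool. It assumes that a percentage is taken from the current node count and rounded up, and the rounds figure is only a rough estimate, so confirm both against the AKS upgrade documentation before planning a window.

```python
def surge_nodes(node_count: int, max_surge: str) -> int:
    """Translate a max surge setting ("33%" or "2") into extra nodes."""
    if max_surge.endswith("%"):
        percent = int(max_surge[:-1])
        # Ceiling division, so a fractional node rounds up (assumption).
        surge = -(-node_count * percent // 100)
    else:
        surge = int(max_surge)
    return max(1, surge)


def upgrade_rounds(node_count: int, max_surge: str) -> int:
    """Rough number of batches needed to replace every node in the pool."""
    surge = surge_nodes(node_count, max_surge)
    return -(-node_count // surge)


print(surge_nodes(10, "33%"), upgrade_rounds(10, "33%"))  # 4 3
print(surge_nodes(10, "1"), upgrade_rounds(10, "1"))      # 1 10
```

A pool of 10 nodes upgrades in about 3 rounds at 33% but needs 10 rounds with a surge of one node.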

Monitor Everything

Set up complete monitoring with:

Container insights in Azure Monitor (formerly Azure Monitor for containers) for logs and metrics

Prometheus and Grafana for detailed metrics

Azure Log Analytics for central log storage

Jaeger or Application Insights for tracing

Create dashboards for:

Executives

Operations teams

Developers

Security teams

https://learn.microsoft.com/en-us/azure/azure-monitor/containers/monitor-kubernetes

Plan for Disasters

Set up backup and recovery:

Use Velero for cluster and app backup

Store all settings in Git repos

Spread workloads across regions

Test disaster recovery quarterly
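With Velero, recurring backups are declared as a `Schedule` resource. The sketch below builds one that backs up selected namespaces every night and keeps each backup for 30 days. It assumes Velero is installed in the `velero` namespace. The schedule name, namespaces, and retention period are illustrative.

```python
import json


def velero_schedule(name: str, namespaces: list[str], cron: str = "0 2 * * *", ttl: str = "720h0m0s") -> dict:
    """Build a Velero Schedule that backs up the given namespaces."""
    return {
        "apiVersion": "velero.io/v1",
        "kind": "Schedule",
        # Assumes Velero runs in its conventional "velero" namespace.
        "metadata": {"name": name, "namespace": "velero"},
        "spec": {
            "schedule": cron,  # standard cron syntax: 02:00 every day
            "template": {
                "includedNamespaces": namespaces,
                "ttl": ttl,  # how long each backup is kept
            },
        },
    }


# Hypothetical namespaces to protect.
schedule = velero_schedule("nightly-apps", ["payments", "shop"])
print(json.dumps(schedule, indent=2))
```

Keeping this manifest in Git alongside the rest of the cluster settings means the backup policy is restored together with everything else.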

Cut Costs

Right-Size Resources

Use these tools to match resources to needs:

Horizontal Pod Autoscaler to scale pods based on metrics

Cluster Autoscaler to add or remove nodes

KEDA for event-based scaling
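The Horizontal Pod Autoscaler picks a replica count by comparing the current metric value with the target. The sketch below follows the formula in the Kubernetes documentation, desired = ceil(current replicas × current metric / target metric), with the default 10% tolerance. It is simplified: it ignores pod readiness, missing metrics, and stabilization windows, and the minimum and maximum values are example settings.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified version of the Horizontal Pod Autoscaler calculation."""
    ratio = current_metric / target_metric
    # Close enough to the target: leave the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
print(desired_replicas(4, current_metric=62, target_metric=60))  # 4
print(desired_replicas(8, current_metric=150, target_metric=60)) # 10
```

The first call scales out because CPU is 50% above target, the second stays put because the gap is within tolerance, and the third is capped by the maximum.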

Control Spending

Add cost controls:

Resource Quotas for namespace limits

Azure Cost Management with tags

Spot node pools (Azure Spot VMs) for non-critical workloads that can tolerate interruption

Reserved Instances for stable workloads
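A namespace limit is expressed as a Kubernetes `ResourceQuota`. The sketch below builds one that caps the total CPU, memory, and pod count a team can claim. The namespace and the numbers are examples, not recommendations.

```python
import json


def namespace_quota(namespace: str, cpu: str, memory: str, pods: int) -> dict:
    """Build a ResourceQuota that caps requests, limits, and pod count."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{namespace}-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "requests.cpu": cpu,
                "requests.memory": memory,
                # Simplification: limits are capped at the same values.
                "limits.cpu": cpu,
                "limits.memory": memory,
                "pods": str(pods),
            }
        },
    }


# Example values for a hypothetical team namespace.
quota = namespace_quota("payments", cpu="8", memory="16Gi", pods=40)
print(json.dumps(quota, indent=2))
```

Once a quota on CPU or memory is in place, pods in that namespace must declare requests and limits, which also makes cost reports more meaningful.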

Connect to DevOps Tools

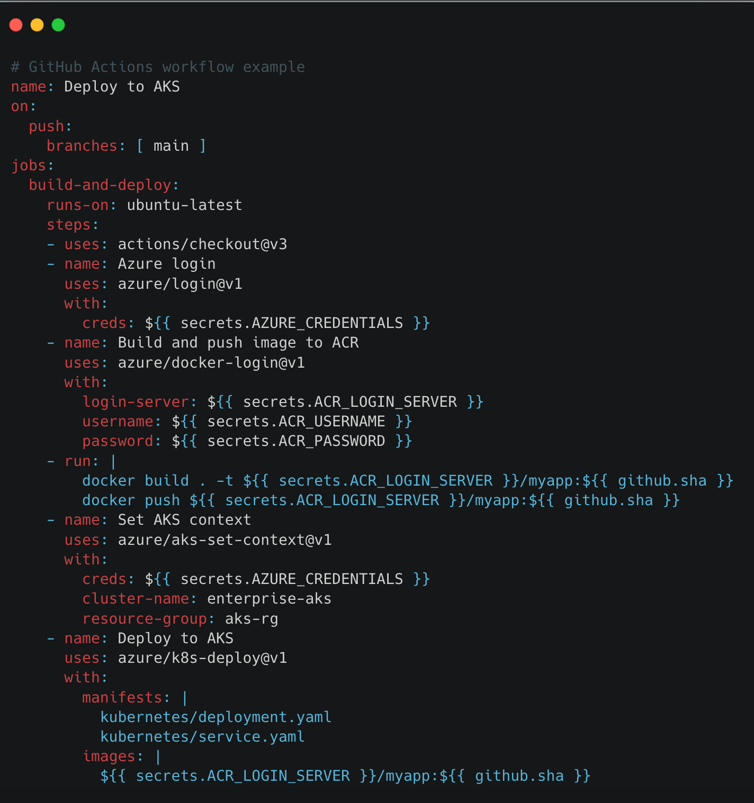

Set Up CI/CD Pipelines

Connect AKS with build and deploy pipelines:

Use Azure DevOps or GitHub Actions for automation

Set up GitOps with Flux or ArgoCD

Add canary or blue/green deployments

Add Service Mesh

For complex microservices, add a service mesh:

A managed service mesh add-on for AKS (such as the Istio-based add-on) for Azure integration

Features include:

Canary deployments

Circuit breaking

TLS encryption

Traffic splitting
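Traffic splitting and canary deployments both rest on the same idea: send a fixed share of requests to the new version. The sketch below illustrates that idea with a deterministic hash, so the same request ID always lands on the same version. It is a conceptual model only. A real mesh makes this decision in its proxies based on route configuration, and the request IDs here are invented.

```python
import hashlib


def route(request_id: str, canary_percent: int) -> str:
    """Pick a backend version for a request, stable for a given ID."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    # Map the hash onto 100 buckets numbered 0..99.
    bucket = int.from_bytes(digest[:8], "big") % 100
    return "canary" if bucket < canary_percent else "stable"


# Invented request IDs, used to see how a 10% split behaves.
request_ids = [f"req-{number}" for number in range(1000)]
canary_hits = sum(route(request_id, 10) == "canary" for request_id in request_ids)
print(f"{canary_hits} of {len(request_ids)} requests went to the canary")
```

Raising `canary_percent` step by step while watching error rates is the usual way to promote a canary to full traffic.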

New Features to Try

Azure Arc

For hybrid setups, try Azure Arc-enabled Kubernetes. It lets you apply the same policies and monitoring to on-premises and cloud clusters. See https://learn.microsoft.com/en-us/azure/azure-arc/kubernetes/overview

Event-Driven Design

Try event-driven patterns with:

KEDA for event-based scaling

Event Grid for events

Dapr for microservice development
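As an example of event-based scaling, KEDA is configured through a `ScaledObject` that ties a deployment to an event source. The sketch below builds one for an Azure Service Bus queue and allows scaling down to zero when the queue is empty. The deployment, queue, and `TriggerAuthentication` names are hypothetical. Treat the trigger fields as an assumption and check them against the KEDA scaler documentation for your version.

```python
import json


def servicebus_scaled_object(deployment: str, queue_name: str, messages_per_replica: int = 5, max_replicas: int = 20) -> dict:
    """Build a KEDA ScaledObject driven by Service Bus queue length."""
    return {
        "apiVersion": "keda.sh/v1alpha1",
        "kind": "ScaledObject",
        "metadata": {"name": f"{deployment}-scaler"},
        "spec": {
            "scaleTargetRef": {"name": deployment},
            "minReplicaCount": 0,  # scale to zero when there is no work
            "maxReplicaCount": max_replicas,
            "triggers": [
                {
                    "type": "azure-servicebus",
                    "metadata": {
                        "queueName": queue_name,
                        # Target number of queued messages per replica.
                        "messageCount": str(messages_per_replica),
                    },
                    # Hypothetical TriggerAuthentication holding the credentials.
                    "authenticationRef": {"name": "servicebus-auth"},
                }
            ],
        },
    }


scaled_object = servicebus_scaled_object("order-processor", "orders")
print(json.dumps(scaled_object, indent=2))
```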

Success with AKS depends on attention to architecture, security, operations, and cost control. These practices help you build secure, scalable Kubernetes environments that deliver business value.

AKS keeps changing. Set up a team to track new features and keep your approach current to get the most from your Kubernetes investment.